The database engine determines the maximum disk I/O size used during multiblock reads (for example, full table scans or index fast full scans) by multiplying the values of the db_block_size and db_file_multiblock_read_count initialization parameters. The db_file_multiblock_read_count initialization parameter can be set explicitly, or, as of version 10.2, it’s also possible to instruct the database engine to automatically configure it. For the latter, simply don’t set it.

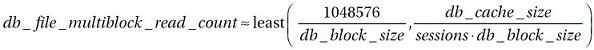

About the value which is automatically determined by the database engine the Oracle Database 12c Reference Guide gives us the following information:

The default value of this parameter is a value that corresponds to the maximum I/O size that can be performed efficiently. This value is platform-dependent and is 1MB for most platforms.

Because the parameter is expressed in blocks, it will be set to a value that is equal to the maximum I/O size that can be performed efficiently divided by the standard block size. Note that if the number of sessions is extremely large the multiblock read count value is decreased to avoid the buffer cache getting flooded with too many table scan buffers.

Unfortunately, the Reference Guide provides no precise information about what an extremely large number of sessions is.

To provide concrete information about it, as soon as the feature appeared back in 2005, I did tests to find out what the database engine does. Those tests resulted in the formula I published in Troubleshooting Oracle Performance (page 178 in the 1st edition and page 281 in the 2nd edition).

An interesting thing to point out is that the feature is not supposed to work like that. Specifically, it should not depend on the maximum number of sessions that an instance supports and/or the size of the database buffer cache.

How I know it?

Well, back in the summer of 2005, while testing the feature, I was puzzled by what I found. Specifically, that depending on how the auto SGA management sized the database buffer cache, different values of db_file_multiblock_read_count are computed. As a result, when using auto SGA management, every time an instance is bounced, the database engine can set a different value for db_file_multiblock_read_count and, therefore, causing some instability in the performance of multiblock reads. That same summer, on the 7th of September, I had the chance to participate to an event called “Development meets Customers” organized by Oracle in Frankfurt. There I had the chance to meet the developers Benoît Dageville, Mohamed Zait and Andrew Witkowski. I discussed the issue I “discovered” few weeks before with Benoît. Interestingly, he immediately told me that that was not the way the feature was supposed to work. It was designed differently. But, when I show him my test case, he had to admit that it actually worked that way! After that discussion we agreed that I would open and service request and, few weeks later, bug number 4664866 was opened. It was the 10th of October 2015. Exactly 10 years ago.

10 years after, the bug is still there, it can also be reproduced in version 12.1.0.2. And, even though it’s still recognized as a bug, it looks like nobody cares about it.

So, join me in celebrating its 10th anniversary!

Chris

I checked the bug and I think this falls into the “slipped through the cracks” area as the two people who were active on the bug left Oracle back in 2012. I’ve assigned the bug to the owner of the buffer cache area to see if it needs to be re-prioritized / reassigned.

Pete

Thank you very much, Pete!

thank you for sharing those findings.

Regards,

Foued

If it takes 10 years for something as simple as that, how long will it take to fix OJVM patches to be rolling ?!?

With Direct Path Read, how this is calculated. Since there will not be use of buffer cache, then it should not come into calculation. any idea ?

Hi

The decision of either doing buffered or direct reads is taken by the execution engine. The query optimizer has no clue about it. Hence, there is no calculation that takes it in consideration.

Best,

Chris